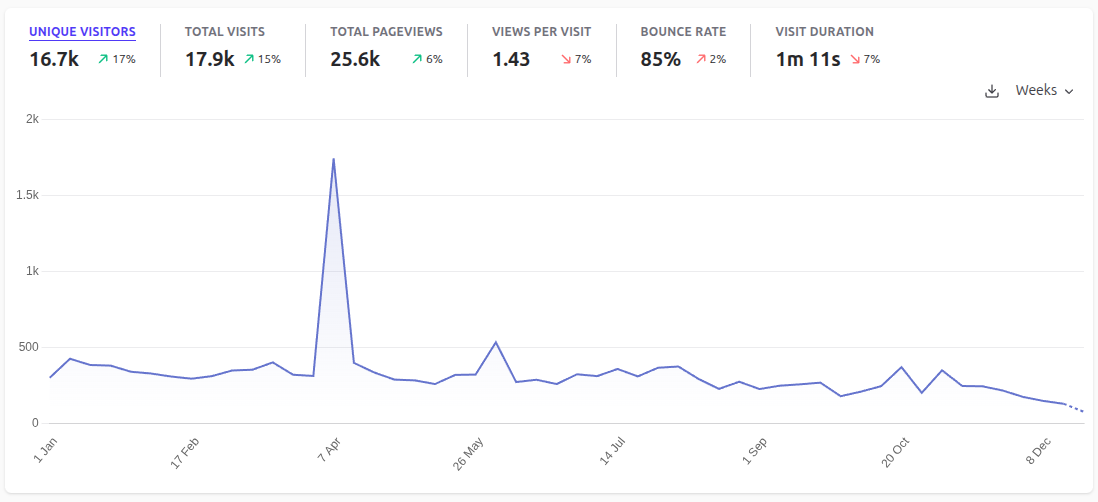

Results of 2025 for me and my blog

Blog metrics for 2025.

The New Year is near, so it's time to sum up the results of the year. Let me tell you what I was doing in 2025, how my plans for the past year went, and what my plans are for the coming year.

LLM agents are still unfit for real-world tasks

AI agents show their work to a programmer (c) ChatGPT & Hieronymus Bosch.

This week, I tested LLMs on real tasks from my day-to-day programming. Again.

Want a cool open source project in your portfolio?

A plea to the universe for a humane auth proxy (c) ChatGPT & Hieronymus Bosch.

As a mid-journey summary of my struggles with OAuth2/OIDC, I can say this: I haven't seen as many abstraction leaks and half-baked implementations as in modern open-source authentication proxies for a very long time, if ever.

Sure, it's great that such proxies exist at all and that there's something to choose from. It's also clear that they were made by enterprise developers to cover their very specific enterprise pains — most likely as side projects alongside their main products. But still… damn.

If you ever wanted a cool open source project to show off in your portfolio, grab Rust or Go and build a small auth proxy with OIDC and OAuth2 support that simply works. Something not aimed at corporations with Kubernetes clusters, but at small companies and indie developers who need to quickly plug a functionality gap without touching their app code. The situation where you have to modify backend code just to make the proxy work is pure madness.

People will bow to you :-) Especially now, when OAuth2 has suddenly become even more essential, since it's required by the Model Context Protocol.

Ory's sketchy authentication architecture

Tiendil trying to understand how Ory works (c) ChatGPT & Hieronymus Bosch.

I'm going to vent here — either that or shout into the void.

I've been diving into authentication a bit deeper than I wanted, and ran into the fact that it's now considered borderline best practice to have your auth proxy call out to external services to enrich the request with extra data for the backend.

For example, if you have an API that's available to both authenticated and anonymous users, Ory Oathkeeper (an auth proxy) can't add a header with the user ID: either you lock the API to authenticated users only, or you don't add the header.

The recommended "solution" is to create your own microservice (!): the proxy calls the microservice (for every request!), the microservice calls Ory Kratos (!), which is the session store (among other things), fetches the session, and returns the info to the proxy. In other words, to add one header, you chain two internal requests on every API call (or three, since in theory Kratos can hit a database or cache).

That's absurd.
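
To make the cost concrete, here is a minimal in-memory sketch of that request chain. It does not use any real Ory API: `SessionStore`, `EnrichmentService`, `Proxy`, the `X-User-Id` header, and the cookie values are all hypothetical stand-ins, and network calls are replaced with plain method calls that just count hops.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SessionStore:
    """Stand-in for the session store (Kratos in the text); not its real API."""
    sessions: dict[str, str]
    calls: int = 0

    def lookup(self, cookie: Optional[str]) -> Optional[str]:
        self.calls += 1  # one internal request per lookup
        return self.sessions.get(cookie) if cookie else None


@dataclass
class EnrichmentService:
    """Hypothetical custom microservice that the proxy calls on every request."""
    store: SessionStore
    calls: int = 0

    def headers_for(self, cookie: Optional[str]) -> dict[str, str]:
        self.calls += 1  # one internal request per API call
        user_id = self.store.lookup(cookie)
        # Anonymous requests pass through without the header.
        return {"X-User-Id": user_id} if user_id else {}


@dataclass
class Proxy:
    """Stand-in for the auth proxy (Oathkeeper in the text)."""
    enricher: EnrichmentService

    def forward(self, cookie: Optional[str]) -> dict[str, str]:
        # Returns the extra headers the backend would receive.
        return self.enricher.headers_for(cookie)


store = SessionStore(sessions={"cookie-abc": "user-42"})
enricher = EnrichmentService(store)
proxy = Proxy(enricher)

authed = proxy.forward("cookie-abc")   # request with a valid session
anonymous = proxy.forward(None)        # request without a session
internal_calls = enricher.calls + store.calls
print(authed, anonymous, internal_calls)
```

In this sketch every incoming request, authenticated or not, costs two internal hops before the backend sees it, and a real session store would likely add a database or cache lookup on top.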

Reasoning LLMs are Wandering Solution Explorers

Illustration of the problem (c) ChatGPT.

An interesting paper has appeared on arXiv, arguing that modern reasoning LLMs engage more in "random wandering in the solution space" than in "systematic problem-solving".

The main text is about 10 pages of fairly accessible reading — I recommend checking it out.

Here's what the authors did:

- Formalized the notions of "systematic exploration of the solution space" and "random wandering within the solution space".

- Built a very simple and illustrative model of how these mechanisms work.

- Used that model to show that random wandering can easily be mistaken for systematic exploration, especially when you have plenty of compute power.

- Showed that the effectiveness of random wandering degrades sharply once the task complexity exceeds the available resources.

- Formalized real-world problems as strictly defined tasks with structured solution spaces.

- Tested modern LLMs on these tasks and demonstrated that their behavior more closely resembles random wandering than systematic exploration.
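
The intuition behind the "plenty of compute" point can be illustrated with a toy calculation. This is my own simplified sketch, not the model from the paper: I assume a solution is one specific leaf of a binary tree of depth `depth`, a systematic explorer checks leaves without repetition, and a random wanderer samples leaves uniformly with replacement. The function names and the budget value are made up for the example.

```python
def systematic_success(depth: int, budget: int) -> float:
    """Chance to find the single target leaf when checking distinct leaves."""
    leaves = 2 ** depth
    return min(1.0, budget / leaves)


def wandering_success(depth: int, budget: int) -> float:
    """Chance to find the target when sampling leaves with replacement."""
    leaves = 2 ** depth
    return 1.0 - (1.0 - 1.0 / leaves) ** budget


BUDGET = 1000  # number of leaf checks each explorer can afford

for depth in (5, 10, 15):
    print(
        f"depth={depth:2d}",
        f"systematic={systematic_success(depth, BUDGET):.3f}",
        f"wandering={wandering_success(depth, BUDGET):.3f}",
    )
```

Under these assumptions, when the budget dwarfs the solution space the two strategies are practically indistinguishable by success rate. The gap opens up when the space is about the size of the budget, and once the space is far larger than the budget neither strategy has much chance.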

I mostly agree with the authors' idea, though I wouldn't say the paper is flawless. There's a chance they didn't use LLMs quite correctly, and that the tasks were formalized in a way unfavorable to them.

However, the primary value of the paper isn't in its final conclusions, but in its excellent formalization of the solution-search process, the concepts of "random wandering" and "systematic exploration", and especially their simplified behavioral model.

If you're interested in the question of "whether LLMs actually think" (or, more broadly, in methods of problem-solving), this paper offers a promising angle of attack on the problem.