Migrating from GPT-3.5-turbo to GPT-4o-mini

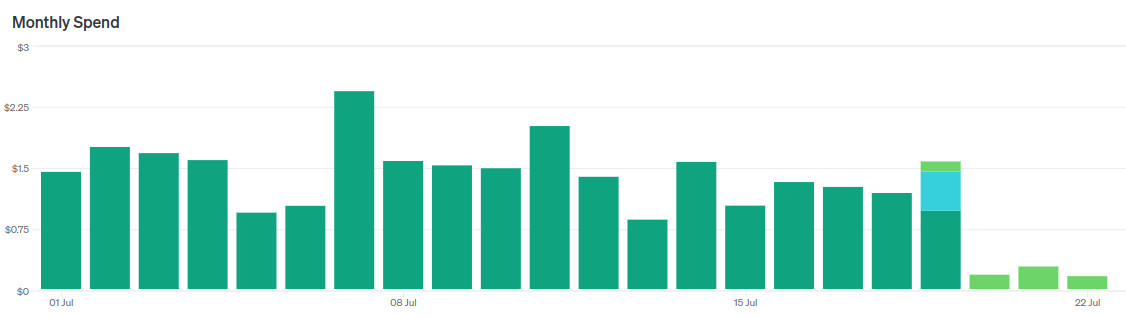

Guess when I switched models.

OpenAI recently released GPT-4o-mini, its new flagship model for the budget segment, so to speak.

- They say it works "almost like" GPT-4o, sometimes even better than GPT-4.

- It is almost three times cheaper than GPT-3.5-turbo.

- The context size is 128k tokens, versus 16k for GPT-3.5-turbo.

Of course, I immediately started migrating my news reader to this model.

In short, it's a cool replacement for GPT-3.5-turbo. I immediately replaced two LLM agents with one without changing prompts, reducing costs by a factor of 5 without losing quality.
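To get a feel for where a factor like that can come from, here is a rough back-of-the-envelope sketch. The per-token prices are the list prices as I remember them around the GPT-4o-mini launch, and the token counts are made up for illustration, so treat every number here as an assumption rather than my actual bill.

```python
# Assumed list prices in USD per 1M tokens (check the current pricing page).
PRICES = {
    "gpt-3.5-turbo": {"input": 0.50, "output": 1.50},
    "gpt-4o-mini": {"input": 0.15, "output": 0.60},
}


def call_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the cost in USD of a single API call."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical workload: one news item is about 2000 input tokens.
# Before: two separate agents, each reads the item and writes ~200 tokens.
before = 2 * call_cost("gpt-3.5-turbo", 2000, 200)

# After: one agent reads the item once and writes both results (~400 tokens).
after = call_cost("gpt-4o-mini", 2000, 400)

ratio = before / after
print(f"before: ${before:.5f}, after: ${after:.5f}, ratio: {ratio:.1f}x")
```

The point is that the saving has two sources: the cheaper price per token, and the fact that the input text is paid for once instead of twice.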

However, once I started tuning the prompt to make it even cooler, I began to run into nuances. Let me tell you about them.

My GPTs and prompt engineering

Ponies are doing prompt engineering (c) DALL-E

I've been using ChatGPT almost since the release of the fourth version (so for over a year now). Over this time, I've gotten pretty good at writing queries to this thing.

At some point, OpenAI allowed customizing chats with your own text instructions (look for Customize ChatGPT in the menu). Over time, I added more and more instructions there, and recently their size exceeded the allowed maximum :-)

Also, it turned out that a universal instruction set is not such a good idea: you need to adjust the instructions for different kinds of tasks, otherwise they won't be as useful as they could be.

Therefore, I moved the instructions to GPT bots instead of customizing my chat. OpenAI calls them GPTs. They are the same chats but with a higher limit on the size of the custom instructions and the ability to upload additional texts as a knowledge base.

Someday, I'll make a GPT for this blog, but for now, I'll tell you about two GPTs I use daily:

- Expert — answers questions.

- Abstractor — makes abstracts of texts.

For each, I'll provide the basic prompt with my comments.

By the way, OpenAI recently opened a GPT store. I'd be grateful if you liked my GPTs. Of course, only if they are useful to you.