Places to discuss Feeds Fun

I continue developing my news reader: feeds.fun. To gather information and people together, I created several resources where you can discuss the project and find useful information:

- Blog blog.feeds.fun

- Discord server

- Reddit r/feedsfun

So far, there is no one and nothing there, but over time, there will definitely be news and people.

If you are interested in this project, join! I'll be glad to see you and will try to respond quickly to all questions.

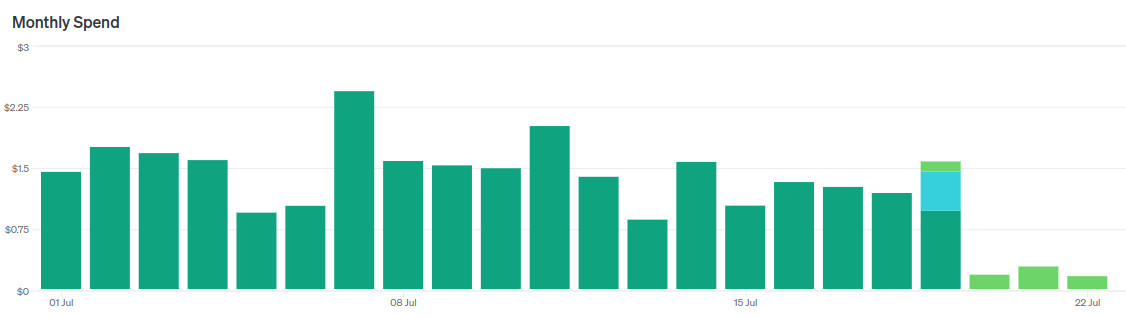

Migrating from GPT-3.5-turbo to GPT-4o-mini

Guess when I switched models.

Recently OpenAI released GPT-4o-mini — a new flagship model for the cheap segment, as it were.

- They say it works "almost like" GPT-4o, sometimes even better than GPT-4.

- It is almost three times cheaper than GPT-3.5-turbo.

- Context size 128k tokens, against 16k for GPT-3.5-turbo.

Of course, I immediately started migrating my news reader to this model.

In short, it's a cool replacement for GPT-3.5-turbo. I immediately replaced two LLM agents with one without changing prompts, reducing costs by a factor of 5 without losing quality.

However, then I started tuning the prompt to make it even cooler and began to encounter nuances. Let me tell you about them.

Computational mechanics & ε- (epsilon) machines

I found a few new concepts worth tracking.

Computational mechanics

There is computational mechanics, which deals with numerical modeling of mechanical processes; there is an article about it on Wikipedia. This post is not about it.

This post is about computational mechanics, which studies abstractions of complex processes: how emergent behavior arises from the sum of the behavior / statistics of low-level processes. For example, why the Great Red Spot on Jupiter is stable, or why the result of a processor's calculations does not depend on the properties of each electron in it.

ε- (epsilon) machine

The concept of a device that can exist in a finite set of states and can predict its future state (or state distribution?) based on the current one.
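To make the idea more concrete, here is a minimal sketch of such a machine in Python. It is only an illustration under my own assumptions: the two states `A` and `B`, the transition table, and the function names are hypothetical, and the example process (binary sequences that never contain two 1s in a row, often called the "golden mean" process) was picked just because it is small. The point is that the current state alone gives the distribution of the next symbol, and the state plus the emitted symbol determine the next state.

```python
import random

# Hypothetical two-state machine for binary sequences with no two 1s in a row.
# TRANSITIONS[state][symbol] = (probability of emitting symbol, next state)
TRANSITIONS = {
    "A": {0: (0.5, "A"), 1: (0.5, "B")},
    "B": {0: (1.0, "A")},  # after a 1, the only allowed symbol is 0
}


def symbol_distribution(state):
    """Return the distribution of the next symbol, given only the current state."""
    return {symbol: p for symbol, (p, _) in TRANSITIONS[state].items()}


def next_state(state, symbol):
    """The current state and the emitted symbol fully determine the next state."""
    return TRANSITIONS[state][symbol][1]


def generate(length, state="A", seed=0):
    """Sample a sequence of symbols by walking the machine."""
    rng = random.Random(seed)
    symbols = []
    for _ in range(length):
        dist = symbol_distribution(state)
        symbol = rng.choices(list(dist), weights=list(dist.values()))[0]
        symbols.append(symbol)
        state = next_state(state, symbol)
    return symbols
```

Calling `generate(20)` produces a random sequence of 0s and 1s in which a 1 is always followed by a 0, even though the machine remembers nothing about the past except which of the two states it is in.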

Computational mechanics allows (or should allow) us to represent complex systems as a hierarchy of ε-machines. This creates a formal language for describing complex systems and emergent behavior.

For example, our brain can be represented as an ε-machine. Formally, the state of the brain never repeats (voltages on neurons, positions of neurotransmitter molecules, etc.), but there are a huge number of situations in which we do the same thing under the same conditions.

Here is a popular science explanation: https://www.quantamagazine.org/the-new-math-of-how-large-scale-order-emerges-20240610/

P.S. I will try to dig into scientific articles. I will tell you if I find something interesting and practical.

P.P.S. I have long been thinking in the direction of a similar thing. Unfortunately, the twists of life do not allow me to seriously dig into science and mathematics. I am always happy when I encounter the results of other people's digging.

My GPTs and prompt engineering

Ponies are doing prompt engineering (c) DALL-E

I've been using ChatGPT almost since the release of the fourth version (so for over a year now). Over this time, I've gotten pretty good at writing queries to this thing.

At some point, OpenAI allowed customizing chats with your text instructions (look for Customize ChatGPT in the menu). With time, I added more and more commands there, and recently, the size of the instructions exceeded the allowed maximum :-)

Also, it turned out that a universal instruction set is not such a good idea — you need to adjust instructions for different kinds of tasks, otherwise, they won't be as useful as they could be.

Therefore, I moved the instructions to GPT bots instead of customizing my chat. OpenAI calls them GPTs. They are the same chats but with a higher limit on the size of the customized instructions and the ability to upload additional texts as a knowledge base.

Someday, I'll make a GPT for this blog, but for now, I'll tell you about two GPTs I use daily:

- Expert — answers to questions.

- Abstractor — makes abstracts of texts.

For each, I'll provide the basic prompt with my comments.

By the way, OpenAI recently opened a GPT store. I'd be grateful if you liked my GPTs. Of course, only if they are useful to you.

About the book "The Net And The Butterfly"

I bought "The Net And The Butterfly" by mistake when I was in St. Petersburg about 5 years ago and organized a book-shopping day. I bought about 10 kilograms of books :-D, grabbed this one on autopilot without reading the contents. I thought the book would be about the network effect and the spreading of ideas, but it turned out to be about how to "manage" a brain relying on one of the neural networks in it. Which network? For the book and its content it does not matter at all.

My opinion of "The Net And The Butterfly" is twofold. On the one hand, I cannot deny its usefulness, on the other… the material could have been presented 100 times better and 3 times shorter. Sometimes, the authors walk on thin ice and risk falling into information peddling/marketing fraud.