Review of the book "The Signal and the Noise"

Nate Silver, the author of "The Signal and the Noise", is widely known for his successful forecasts, for example of US election results. It is not surprising that the book became a bestseller.

As you might guess, the book is about forecasts. More precisely, it is about approaches to forecasting, complexities, errors, misconceptions, and so on.

As usual, I expected a more theoretical approach, in the spirit of Scale [ru], but the author chose a different path and presented his ideas through the analysis of practical cases: one case per chapter. Each chapter describes a significant task, such as weather forecasting, and offers several prisms through which to look at how forecasts are built. This certainly makes the material more accessible, but personally, I would have liked more structure and theory.

Because of the case-study approach, the book is hard to summarize briefly. It is possible, and it would even be interesting to try, but it would take too much work: the author did not intend to provide a coherent system or a short set of basic theses.

Therefore, I will review the book as a whole, provide an approximate list of prisms, and list some cool facts.

Computational mechanics & ε- (epsilon) machines

I found a couple of new concepts worth keeping track of.

Computational mechanics

There is a computational mechanics that deals with numerical modeling of mechanical processes; Wikipedia has an article about it. This post is not about that one.

This post is about the computational mechanics that studies abstractions of complex processes: how emergent behavior arises from the sum of the behavior and statistics of low-level processes. For example, why the Great Red Spot on Jupiter is stable, or why the result of a processor's calculations does not depend on the properties of each electron in it.

ε- (epsilon) machine

The concept of a device that can exist in a finite set of states and can predict its future state (or state distribution?) based on the current one.

Computational mechanics allows (or should allow) us to represent complex systems as a hierarchy of ε-machines. This creates a formal language for describing complex systems and emergent behavior.

For example, our brain can be represented as an ε-machine. Formally, the state of the brain never repeats (voltages on neurons, positions of neurotransmitter molecules, etc.), but there is a huge number of situations in which we do the same thing under the same conditions.
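To make the idea a bit more concrete, here is a minimal Python sketch of a machine with a finite set of states that predicts a distribution over its next state from the current one. It is only an illustration under my own assumptions: the two states `A` and `B`, the transition table, and all function names are made up for this example and do not come from the article linked below.

```python
from fractions import Fraction

# Hypothetical toy machine: state -> list of (probability, emitted symbol, next state).
# It emits 0s and 1s and never emits two 0s in a row.
TRANSITIONS = {
    "A": [(Fraction(1, 2), 1, "A"), (Fraction(1, 2), 0, "B")],
    "B": [(Fraction(1), 1, "A")],
}


def next_state_distribution(state):
    """Distribution over next states, given only the current state."""
    dist = {}
    for prob, _symbol, target in TRANSITIONS[state]:
        dist[target] = dist.get(target, Fraction(0)) + prob
    return dist


def next_symbol_distribution(state):
    """Distribution over the next emitted symbol, given only the current state."""
    dist = {}
    for prob, symbol, _target in TRANSITIONS[state]:
        dist[symbol] = dist.get(symbol, Fraction(0)) + prob
    return dist


def state_after(history, start="A"):
    """Follow the observed symbols from the start state and return the final state."""
    state = start
    for symbol in history:
        for _prob, emitted, target in TRANSITIONS[state]:
            if emitted == symbol:
                state = target
                break
        else:
            # No transition from this state emits the observed symbol.
            raise ValueError(f"symbol {symbol} is impossible in state {state}")
    return state
```

Different histories can lead to the same state: `state_after([1, 1, 0])` and `state_after([0])` both end in `B`, so the machine makes the same prediction for both. This mirrors the brain example above, where many different low-level histories collapse into one "same situation".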

Here is a popular science explanation: https://www.quantamagazine.org/the-new-math-of-how-large-scale-order-emerges-20240610/

P.S. I will try to dig into scientific articles. I will tell you if I find something interesting and practical.

P.P.S. I have long been thinking along similar lines. Unfortunately, the twists of life do not allow me to seriously dig into science and mathematics. I am always happy when I encounter the results of other people's digging.

My GPTs and prompt engineering

Ponies are doing prompt engineering (c) DALL-E

I've been using ChatGPT almost since the release of the fourth version (so for over a year now). Over this time, I've gotten pretty good at writing queries to this thing.

At some point, OpenAI allowed customizing chats with your own text instructions (look for Customize ChatGPT in the menu). Over time, I added more and more commands there, and recently, the size of the instructions exceeded the allowed maximum :-)

Also, it turned out that a universal instruction set is not such a good idea: you need to adjust instructions for different kinds of tasks; otherwise they won't be as useful as they could be.

Therefore, I moved the instructions to GPT bots instead of customizing my chat. OpenAI calls them GPTs. They are the same chats but with a higher limit on the size of the customized instructions and the ability to upload additional texts as a knowledge base.

Someday, I'll make a GPT for this blog, but for now, I'll tell you about two GPTs I use daily:

- Expert: answers questions.

- Abstractor: makes abstracts of texts.

For each, I'll provide the basic prompt with my comments.

By the way, OpenAI recently opened a GPT store. I'd be grateful if you liked my GPTs. Of course, only if they are useful to you.

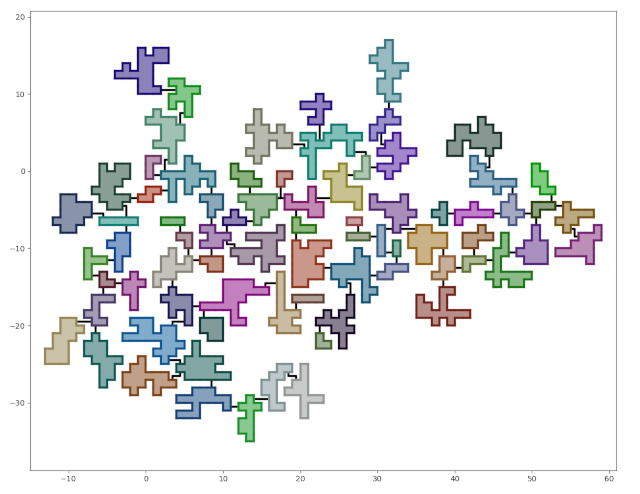

Dungeon generation — from simple to complex

What we should get.

This is a translation of a post from 2020.

This is a step-by-step guide to generating dungeons in Python. If you are not a programmer, you may be interested in reading how to design a dungeon [ru].

I spent a few evenings testing the idea of generating space bases. The space base didn't work out, but the result looks like a good dungeon. Since I went from simple to complex and didn't use rocket science, I converted the code into a tutorial on generating dungeons in Python.

By the end of this tutorial, we will have a dungeon generator with the following features:

- The rooms will be connected by corridors.

- The dungeon will have the shape of a tree. Adding cycles will be elementary, but I'll leave it as homework.

- The number of rooms, their size, and the "branching level" will be configurable.

- The dungeon will be placed on a grid and consist of square cells.

The entire code can be found on GitHub.

There won't be any code in the post — all the approaches used can be easily described in words. At least, I think so.

Each development stage has a corresponding tag in the repository, containing the code at the end of the stage.

The aim of this tutorial is not only to teach how to generate dungeons but also to demonstrate that seemingly complex tasks can be simple when properly broken down into subtasks.

«Slay The Princess» — combinatorial narrative

My favorite version of the Princess.

It's hard to impress me as a player and even harder as a game developer. The last time it happened was with Owlcat Games' Pathfinder: Kingmaker, when they added a timer to the game's plot.

But Black Tabby Games managed to do it. And they did it not with some technological complexity but with a visual novel on a standard engine (Ren'Py), which is cool in itself.

I'll share a couple of thoughts about the game and its narrative structure while the impression is still fresh. I need to think about how to adapt this approach to my projects.

ATTENTION: SPOILERS!

If you haven't played Slay The Princess yet, I strongly recommend that you catch up: the game takes 3-4 hours. You won't regret it.