Unexpectedly participated in a class action lawsuit in the USA

Recently, I unexpectedly encountered the justice system in the USA.

- In 2017-2018, during the crypto boom, I invested a little in a mining startup: I purchased their tokens and one hardware unit.

- The startup took off and began to build a mega farm, but it didn't work out: the fall of Bitcoin coincided with their spending peak, the money ran out, and the company went bankrupt. Funnily enough, a month or two after the bankruptcy filing, Bitcoin won back all its losses. Sometimes you're just unlucky :-)

- I had already written off the lost money, of course. I followed the rule "invest only 10% of the income you don't mind losing."

- Since everything legally took place in the USA, people got together there and filed a class action lawsuit.

- I received a letter stating that I would automatically be among the plaintiffs unless I opted out. I did not opt out; when else would I get an opportunity to participate in a class action?

- Everything went quiet until 2024.

- In the spring, another letter came: "Confirm the ownership of the tokens and indicate their quantity. We won and will share what remains among all token holders proportionally, minus a healthy commission to the lawyers."

- But how do I confirm? More than five years have passed. The Belarusian bank account is closed, the company's admin panel is unavailable, and there was no direct transaction on the blockchain: I paid in Bitcoin directly from an exchange (although doing so is not recommended).

- I found an email from the company confirming that I had bought tokens (without the amount) and printed it to PDF. I attached it to the application along with screenshots of the exchange transactions for the relevant period. I gave the address of my current wallet, where these tokens sit as dead weight. I sent everything.

- Today, I received $700 in my bank account. Of course, this is not all of the lost money: it is about 25%, maybe slightly more.

What conclusions can be drawn from this:

- Sometimes, you just don't get lucky in your business.

- Keep all emails. You never know what will come in handy, or when.

- Class action lawsuits work, and they work in an interesting way.

- Justice in the USA works slowly but, apparently, inevitably and, unexpectedly for me, favorably to minor participants in the conflict. At least sometimes.

Preparing a business plan for a game on Steam

Earning millions is easier than ever. I'll tell you how :-D

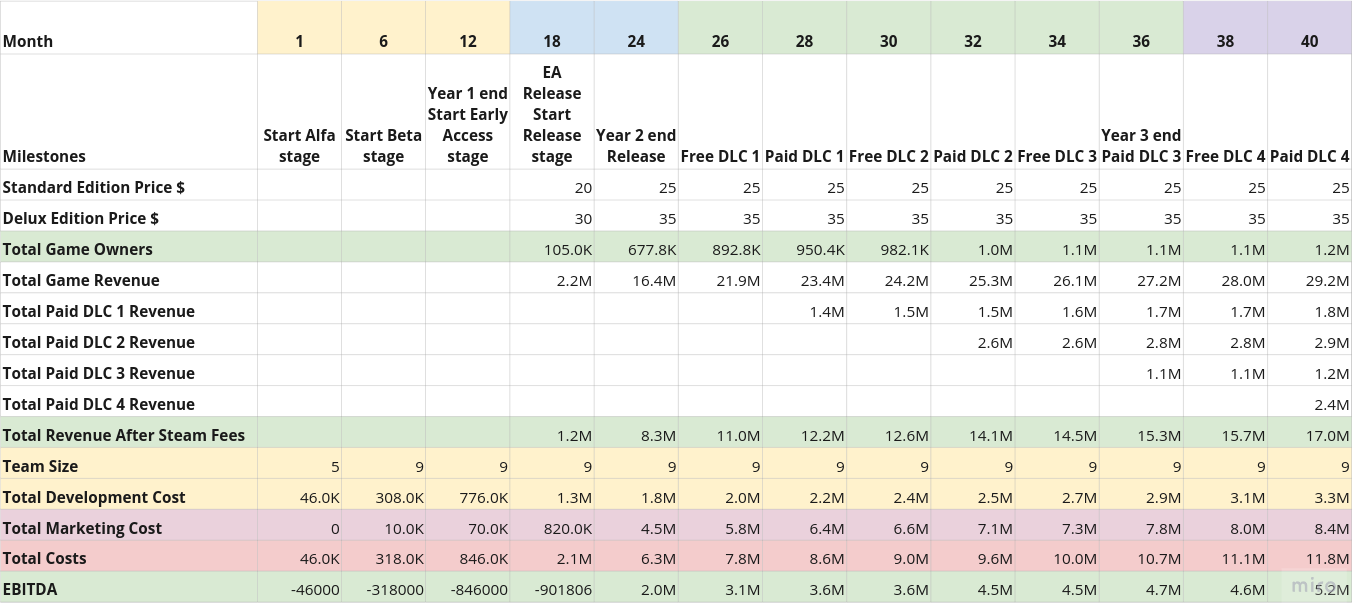

When I posted my final presentation [ru] (slides) for World Builders 2023 (my posts, site), I promised to describe how I made a roadmap and a financial model for the game. So, here they are.

At the end of this post, we will have:

- A brief strategy of our company: what we do, how, and why.

- A table with our beacons — successful games roughly similar to what we want to make. Similar in gameplay, team size, budget, etc.

- A composition of the team we need to assemble.

- A roadmap — a development plan for our game.

- An outline of our marketing strategy.

- A financial model — how much we will spend, how much we will earn.

- A large number of my caveats throughout the post.

- Jokes and, I hope, witty remarks.

All the final documents can be found here.

Grainau: hiking and beer at 3000 meters

How it all looks from the ground.

For her vacation, Yuliya decided to show me the beautiful German mountains and took me for a couple of days to Grainau — it's a piece of Bavaria that's almost like Switzerland. At least, it is similar to the pictures of Switzerland that I've seen :-D

In short, it's a lovely place with a measured pace of life. If you need to catch your breath, calm your nerves, and enjoy nature, then this is the place for you. But if you can't live without parties, you'll get bored quickly.

What's there:

- The highest mountain in Germany plus a couple of glaciers.

- There's skiing in winter. If you really need it, you can find a place to ski in summer, but the descent is short, and the lifts are turned off.

- A large clean lake and a couple of smaller ones.

- A huge number of trails for hiking.

- A huge number of waterfalls, streams, and a couple of mountain rivers.

- Restaurants with beer.

- Beautiful fallen trees in the forests, private property, fences, cows with bells, and "racing tractors" (I don't know a better name for this phenomenon, but the tractors move fast there :-D).

That was the short version; now for the details.

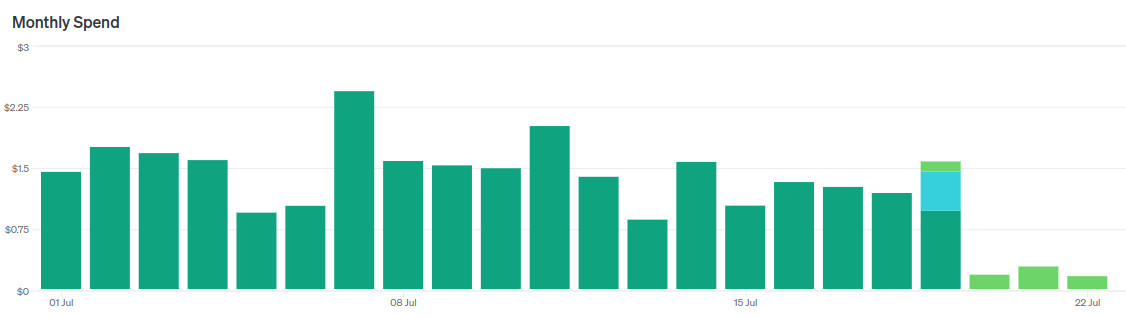

Migrating from GPT-3.5-turbo to GPT-4o-mini

Guess when I switched models.

Recently, OpenAI released GPT-4o-mini, a new flagship model for the cheap segment, as it were.

- They say it works "almost like" GPT-4o, sometimes even better than GPT-4.

- It is almost three times cheaper than GPT-3.5-turbo.

- The context size is 128k tokens, versus 16k for GPT-3.5-turbo.

Of course, I immediately started migrating my news reader to this model.

In short, it's a cool replacement for GPT-3.5-turbo. I immediately replaced two LLM agents with one without changing prompts, reducing costs by a factor of 5 without losing quality.
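Here is a rough back-of-the-envelope sketch of where a factor like that can come from. The prices are assumed list prices per 1M tokens from around the GPT-4o-mini release (check the current pricing page), and the token counts and the `call_cost` helper are invented for illustration; none of this is a measurement from the news reader.

```python
# Assumed list prices in USD per 1M tokens; not taken from this post.
PRICES = {
    "gpt-3.5-turbo": {"input": 0.50, "output": 1.50},
    "gpt-4o-mini": {"input": 0.15, "output": 0.60},
}


def call_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the cost in USD of a single request to `model`."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical workload for one news item.
INPUT_TOKENS = 2000
OUTPUT_TOKENS = 200

# Before: two agents, each sends the same text in its own request.
old_cost = 2 * call_cost("gpt-3.5-turbo", INPUT_TOKENS, OUTPUT_TOKENS)

# After: one agent does both jobs in a single request,
# so the input is paid for once and the output roughly doubles.
new_cost = call_cost("gpt-4o-mini", INPUT_TOKENS, 2 * OUTPUT_TOKENS)

ratio = old_cost / new_cost
print(f"old: ${old_cost:.5f}  new: ${new_cost:.5f}  ratio: {ratio:.1f}x")
```

With these made-up numbers the ratio comes out at about 4.8: part of the saving is the lower price per token, and the rest comes from sending the input text once instead of twice.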

However, then I started tuning the prompt to make it even cooler and began to encounter nuances. Let me tell you about them.

Review of the book "The Signal and the Noise"

Nate Silver, the author of "The Signal and the Noise", is widely known for his successful forecasts, such as those of the US elections. It is not surprising that the book became a bestseller.

As you might guess, the book is about forecasts. More precisely, it is about approaches to forecasting, complexities, errors, misconceptions, and so on.

As usual, I expected a more theoretical approach, in the spirit of Scale [ru], but the author chose a different path and presented his ideas through the analysis of practical cases: one case per chapter. Each chapter describes a significant task, such as weather forecasting, and provides several prisms for looking at how forecasts are built. This certainly makes the material more accessible, but personally, I would have liked a more systematic treatment and more theory.

Because of the case-study approach, it isn't easy to summarize the book briefly. It is possible, and it would even be interesting to try, but the amount of work is too large: the author did not intend to provide a coherent system or a short set of basic theses.

Therefore, I will review the book as a whole, provide an approximate list of prisms, and list some cool facts.