Summary of GPT-5 presentation without marketing pixie dust

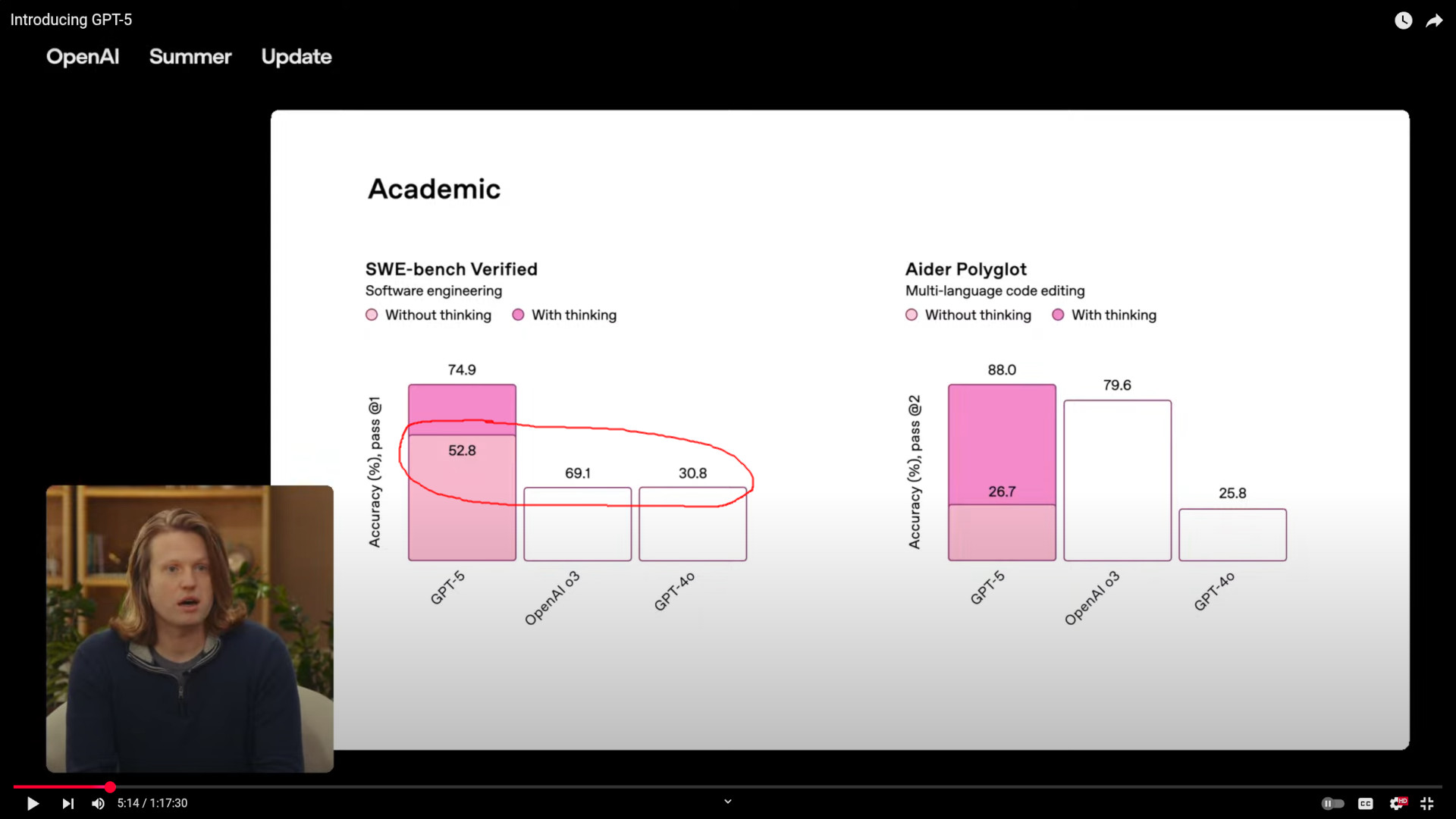

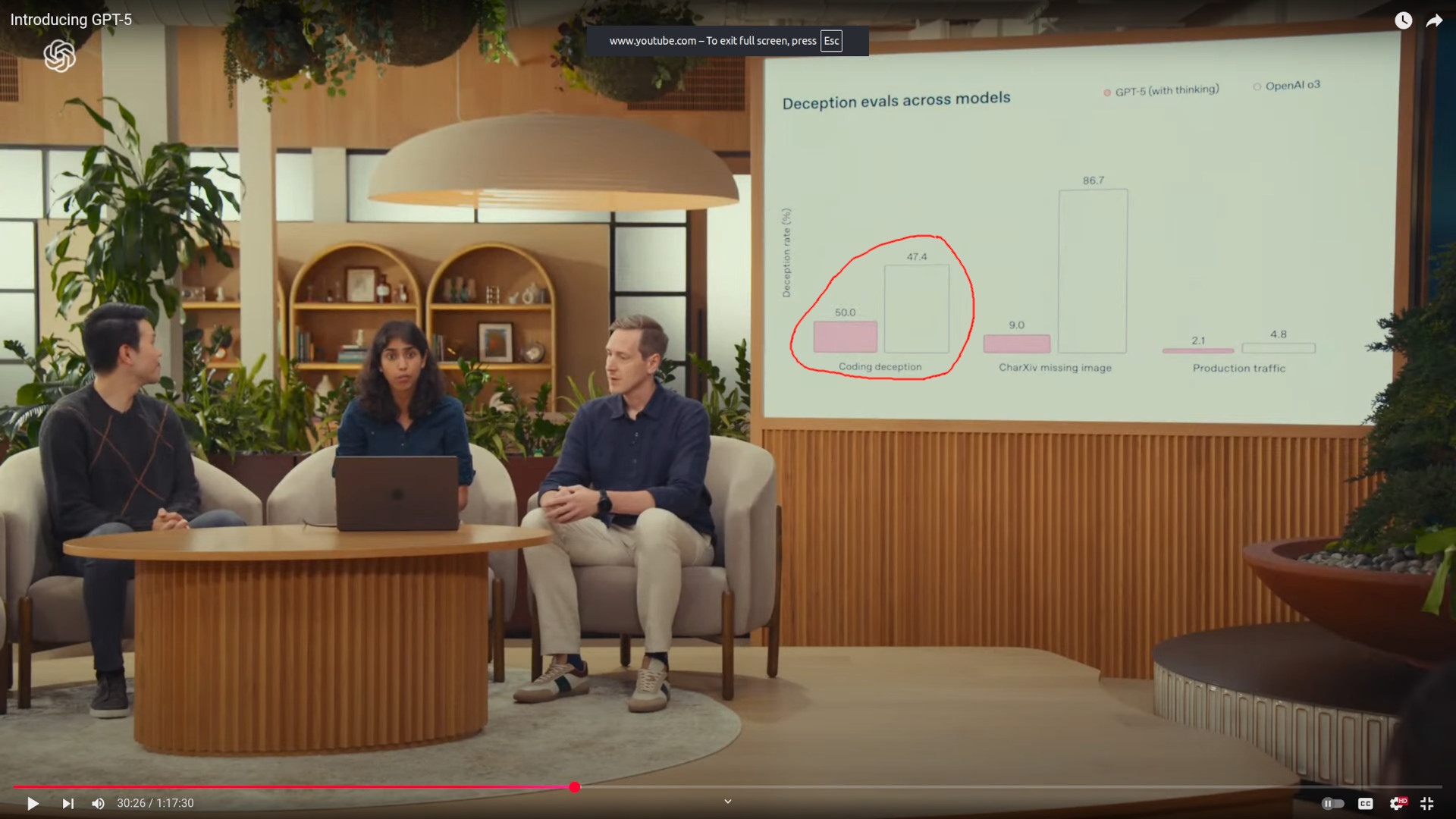

Let him who thinks this is a coincidence cast the first stone at me.

For the record, in the scientific community, disputes over such plots can go as far as ostracizing the authors. But this is business, marketing — so it's fine, right?

- The LLM has become, on average, slightly (by a few percent) smarter.

- In some aspects, the LLM has become significantly smarter (by tens of percent).

- In some aspects, the LLM has become a bit dumber (!).

- The API has become cheaper, or not: it depends on how you use it.

- OpenAI is deliberately misleading people about the capabilities of the new model — see the screenshots.

=> The world's LLM leader has begun to bog down, and the rest will likely follow.

When everything is going well and you make another breakthrough, you don't cheat with the pictures.

This doesn't mean that progress has stopped. Still, it does mean that the growth of technology is transitioning from the explosive phase of "discovering new things" to a more or less steady phase of "optimizing technologies in a million directions, where there are only enough hands for a hundred."

We are approaching the "Trough of Disillusionment" phase of the Gartner hype cycle.

In this regard, I would like to remind you of my prognosis for the future of AI; so far, it's holding up.

Let me add a few more thoughts.

Global projects like Stargate will not affect the situation to the extent their creators hope. The problem isn't a shortage of hardware or data centers, but the limitations of model and hardware architectures. These problems can't be solved by building more data centers — they're solved by scaling R&D through:

- training and hiring specialists;

- launching high-risk experiments.

The first point is more or less fine (the hype helps, and in 5–10 years we'll have plenty of young specialists), but the second one isn't. The current leaders have grown too big and too dependent on investors (in the West) and the state (in China); they simply can't take risks. Neither OpenAI nor Google nor Meta can now make a sharp pivot toward any architectural alternative to today's LLMs, no matter how promising it might be. For the next phase of explosive growth (which will come sooner or later and may even lead to strong AI), we need "yet another OpenAI".

Why can't the big players make a pivot?

Contemporary LLM technologies are already generating revenue, huge budgets have been spent on their optimization, and further optimization and the profit from it are predictable, even if they don't promise explosive growth. Any new technology would require comparable investments in optimization just to reach parity with current LLMs, while always carrying a significant risk of failure and of wasting billions.

The same is true for technologies built on top of LLMs that use them as basic components: the search space for successful solutions is too vast, and a solution may require tuning LLMs in a new direction, one that could be orthogonal or even opposite to the current one.

That's why, until the optimization potential of current hardware and LLM architectures is fully exhausted, no significant money will be invested in searching for alternatives. And we are still far from exhausting the optimization opportunities.

Let's not look far for an example — take GPUs and parallel computing.

Simplifying:

- Was it always obvious that mass parallel computing is a powerful and necessary thing? Of course!

- Did we build expensive supercomputers that tried to do parallel computing on existing technologies? Yes!

- Did we develop architectures for parallel computing? For decades!

- But the first mass-produced hardware with massive parallelism appeared in narrow niches: computer games, complex rendering, and science, because only in these fields was it absolutely necessary. Only when practice confirmed that the path was correct and valid, and the technology itself had quietly overcome its teething problems, did mass adoption begin. For example, browsers started using GPUs for rendering only around 2010.

- Could more billions theoretically have been invested in developing "video cards" to achieve comparable results years earlier by scaling R&D? Yes, but no one (except NVIDIA?) wanted to take such a risk when everything was already working fine. There were many safer directions for investing in progress and profit.

The same is happening with LLMs right now. Until we fully digest all the possibilities they have opened up for us, something conceptually more powerful is more likely to appear as a lucky accident than as the result of deliberate effort.
